In the field of artificial intelligence, multimodal learning has always been a cutting-edge and challenging research direction. Recently, the collaboration between the University of Hong Kong (HKU) and ByteDance has introduced a new multimodal large model, drawing widespread attention in both academia and industry. This partnership represents not only a technological breakthrough but also a model of cross-disciplinary collaboration.

Technological Breakthrough: A New Paradigm in Multimodal Large Models

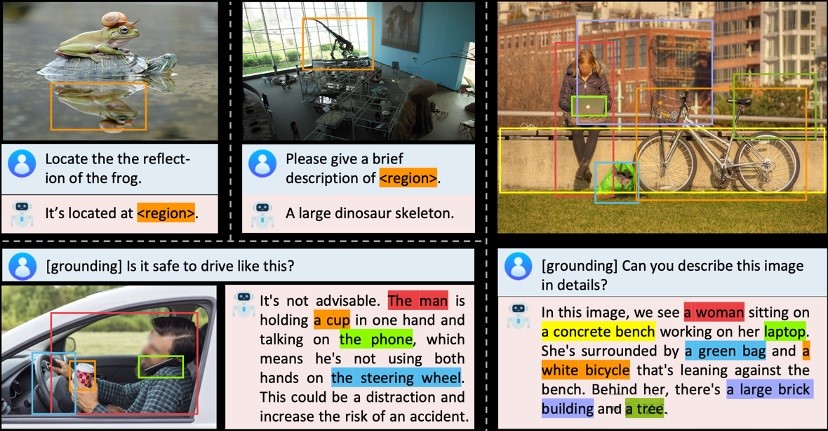

According to reports, the multimodal large model proposed by HKU and ByteDance, named Groma, enhances the model's perceptual localization capability through regional image encoding. This innovation allows the model to directly associate textual content with specific image regions, significantly improving the interactivity and referentiality of dialogues. This "perception before cognition" paradigm simulates the human visual process, opening up new possibilities for the application of multimodal large models.

Figure: HKU and ByteDance Jointly Launch New Multimodal Large Model Groma

In-Depth Analysis: Dual Innovation in Technology and Application

1. Technological Innovation: The core of the Groma model lies in its regional image encoding technology. This technique allows the model to perceive and understand specific areas within an image, rather than being limited to an overall image understanding. This is particularly valuable in fields such as image editing, autonomous driving, and robotic control.

2. Application Innovation: By enhancing the model's perceptual localization capabilities, Groma can support more complex and precise tasks, such as pixel-level image segmentation and accurate object localization. This provides more possibilities for the practical application of multimodal large models.

3. Computational Efficiency: The design of the Groma model takes computational efficiency into account. Through a segregated design, the model can maintain high-resolution feature maps while reducing computational load, which is crucial for resource-constrained real-world applications.

Challenges and Opportunities

Despite the technological breakthroughs brought by the Groma model, it also faces several challenges:

1. Data Requirements: High-quality regional image encoding requires large amounts of training data. Acquiring, labeling, and utilizing this data is a significant aspect of model development.

2. Generalization Ability: While the model performs excellently on specific tasks, its generalization ability—how well it performs across various scenarios and tasks—needs further validation.

3. Ethics and Privacy: As the model's perceptual capabilities increase, ensuring user data security and privacy, while avoiding potential ethical risks, is a critical concern during development.

Conclusion and Outlook

The multimodal large model Groma, jointly proposed by HKU and ByteDance, exemplifies technological innovation and cross-disciplinary collaboration. It advances the field of multimodal learning and offers new tools and approaches for practical applications. However, with technological advancements, it is essential to address challenges such as data requirements, generalization ability, and ethical and privacy issues. In the future, we look forward to the Groma model overcoming these challenges and bringing more innovation and breakthroughs to the field of artificial intelligence.